The Cognitive Biases Sabotaging Your Product Decisions (And How to Counter Them)

Practical techniques to overcome the invisible forces that undermine even the most data-driven product teams

Every product decision you make is quietly influenced by cognitive biases you might not even recognize. Despite data-driven approaches and structured frameworks, our brains still take shortcuts that distort our judgment.

During my years at Booking.com, Eneco, Foodics and 7awi, I've seen how these invisible biases derail even the most carefully planned product strategies. The most dangerous part? We rarely notice them until the damage is done.

Let me show you the most damaging biases in product management and practical techniques to overcome them.

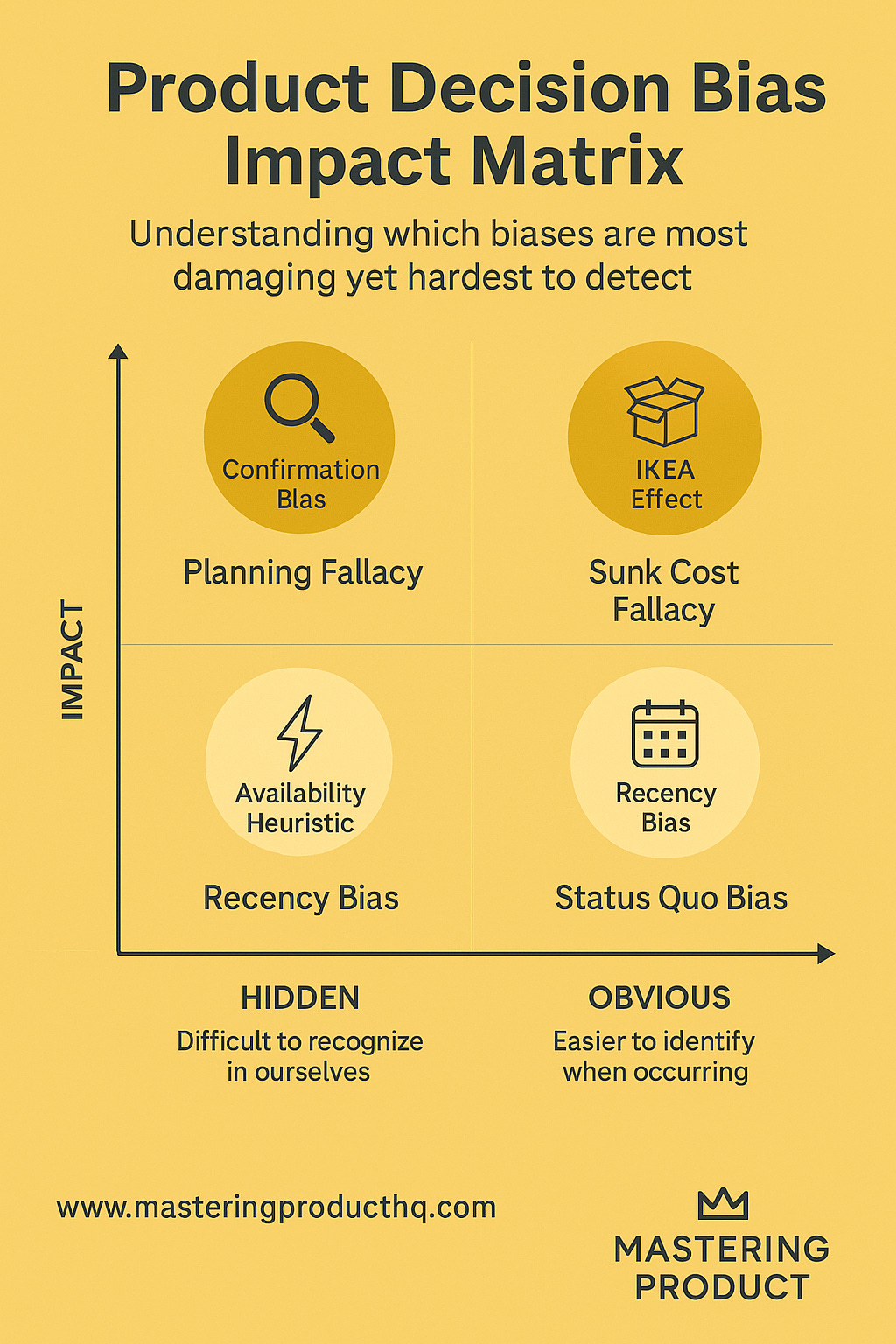

1. Confirmation Bias: Seeing What You Want to See

The Bias: You seek out information that supports your existing beliefs while ignoring contradictory evidence.

How It Sabotages Product Decisions:

You interpret user research selectively to validate your product vision

You dismiss critical feedback that challenges your assumptions

You give more weight to data that aligns with your hypotheses

Real-World Example: At Booking.com, we were convinced a new feature would drive conversion improvements. Our initial research seemed positive, reinforcing our belief. After launch, we saw virtually no impact. We had unconsciously filtered user feedback, focusing on positive signals while dismissing subtle indicators that users didn't actually value the feature.

Practical Countermeasures:

Assign a dedicated devil's advocate in every product discussion

Ask explicitly: "What would prove this hypothesis wrong?"

Set success criteria before seeing results

Review data blindly when possible, without knowing which variant is which
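To make the last two countermeasures concrete, here is a minimal Python sketch. It assumes an A/B test that reports one conversion rate per variant. `SuccessCriterion`, `blind_labels`, and `meets_criterion` are hypothetical names, and the numbers are invented. The criterion is written down before any results exist, and reviewers see neutral labels until the discussion is over.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    """Hypothetical pre-registered criterion, written down before any results exist."""
    metric: str
    min_relative_lift: float  # 0.02 means treatment must beat control by at least 2%


def blind_labels(results: dict[str, float], seed: int) -> tuple[dict[str, float], dict[str, str]]:
    """Replace variant names with neutral labels in a random order.

    Returns the blinded results for reviewers and the key for unblinding later.
    """
    names = list(results)
    random.Random(seed).shuffle(names)
    key = {f"variant_{chr(ord('A') + i)}": name for i, name in enumerate(names)}
    blinded = {label: results[name] for label, name in key.items()}
    return blinded, key


def meets_criterion(control: float, treatment: float, criterion: SuccessCriterion) -> bool:
    """Apply the criterion fixed in advance; the threshold cannot move after the fact."""
    return (treatment - control) / control >= criterion.min_relative_lift


# Usage with invented numbers: a lift that "looks positive" but misses the pre-set bar.
criterion = SuccessCriterion(metric="conversion_rate", min_relative_lift=0.02)
results = {"control": 0.0410, "treatment": 0.0412}
blinded, key = blind_labels(results, seed=7)
# Reviewers discuss `blinded` without knowing which label is the new feature.
print(meets_criterion(results["control"], results["treatment"], criterion))  # False
```

The freezing of the dataclass is a small design nudge: once the criterion object exists, nobody can quietly lower `min_relative_lift` after seeing the data.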

Try This Today: Before your next major decision, answer these four questions:

Have I actively looked for evidence that contradicts my position?

Can I articulate the strongest case against my decision?

Have I consulted someone with a different perspective?

Would I make the same decision if the data pointed in the opposite direction?

2. Sunk Cost Fallacy: The Persistence Trap

The Bias: You continue investing in something based on past investment rather than future value.

How It Sabotages Product Decisions:

You keep developing features that data shows aren't valuable

You persist with product strategies the evidence has already rejected

You refuse to pivot because "we've already put so much into this"

Real-World Example: We once spent six months developing a complex analytics dashboard that our initial research suggested customers wanted. Three months in, feedback indicated that a much simpler solution would better meet customer needs. We continued anyway because "we've already done half the work." The result? An over-engineered product with poor adoption.

Practical Countermeasures:

Schedule regular go/no-go decision points in your development process

Evaluate decisions based on future value, not past investment

Break initiatives into smaller, independently valuable increments

Recognize teams that make the tough call to kill underperforming initiatives

Try This Today: For your current project, conduct a "Fresh Start Review":

If we were starting today with what we now know, would we still make the same decision?

What would we do differently if we had no prior investment?

What could we do with these resources if we stopped this work today?
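The Fresh Start Review can also be expressed as a go/no-go check that simply has no input for money already spent. This is a minimal sketch, assuming the team can put rough numbers on remaining cost, expected value, and the best alternative use of the same resources. `Initiative` and `fresh_start_review` are hypothetical names, and the figures are invented.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    spent_cost: float      # already spent; kept for reporting, never used in the decision
    remaining_cost: float  # cost to finish, estimated from today
    expected_value: float  # value we now expect on completion, using today's evidence


def fresh_start_review(initiative: Initiative, best_alternative_net_value: float) -> str:
    """Go/no-go based only on future value and future cost.

    best_alternative_net_value is the team's estimate of what the same remaining
    resources would return elsewhere (value minus cost); use 0.0 for "stop and do nothing".
    """
    net_if_continue = initiative.expected_value - initiative.remaining_cost
    return "go" if net_if_continue > best_alternative_net_value else "no-go"


# Invented numbers: half the budget is spent, but finishing is now worth less than it costs.
dashboard = Initiative("analytics dashboard", spent_cost=300_000,
                       remaining_cost=300_000, expected_value=200_000)
print(fresh_start_review(dashboard, best_alternative_net_value=0.0))  # no-go
```

Because `spent_cost` never enters the comparison, changing it cannot flip the outcome, which is the property the Fresh Start Review is meant to enforce.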

3. The IKEA Effect: Overvaluing Your Own Creations

The Bias: You place higher value on things you helped create, regardless of their objective quality.

How It Sabotages Product Decisions:

You overestimate the value of features you personally conceived

You resist feedback suggesting your creations need significant changes

You prioritize your ideas over potentially better alternatives

Real-World Example: I once spent weeks designing what I thought was an elegant solution to a complex user problem. When user feedback was lukewarm, I convinced myself they "just didn't get it yet" and pushed for launch with minimal changes. The feature saw poor adoption and eventually required a complete redesign based on feedback I should have heeded initially.

Practical Countermeasures:

Have ideas evaluated by team members other than those who created them

Implement blind evaluation processes where ideas are judged without knowing their source

Create multiple solutions to the same problem

Define success in terms of user impact, not feature completion

Try This Today: For your current favorite feature or idea, apply the "Proud Parent Test":

Would I be equally enthusiastic if someone else had proposed this?

Am I defending this based on its merits or because it's "my baby"?

Can I name three significant limitations in my own idea?

4. Recency Bias: The Latest is the Greatest

The Bias: You give too much importance to recent events or information compared to older data.

How It Sabotages Product Decisions:

You dramatically shift priorities based on the latest customer feedback

You overreact to recent competitor moves

You make decisions based on short-term metric fluctuations rather than sustained patterns

Real-World Example: After one vocal customer complained about a specific feature gap, we immediately reprioritized our roadmap to address it. We later discovered this issue affected less than 2% of users, while we delayed work on problems impacting over 50% of our user base.

Practical Countermeasures:

Keep a decision journal documenting context and rationale

Implement a "cooling-off period" for decisions triggered by recent events

Review longer-term data trends before shifting priorities

Create a systematic feedback aggregation process

Try This Today: Before pivoting based on new information, ask:

How does this compare to what we've heard over the past 3-6 months?

Is this a pattern or an isolated data point?

Would we make this same decision if we'd received this information a month ago?
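One way to answer "Is this a pattern or an isolated data point?" is to compare recent mentions of a feedback theme against that theme's own longer-term baseline. The sketch below is an assumption, not a standard method: `is_emerging_pattern` is a hypothetical helper, and the two-week window and z-score threshold of 2 are arbitrary defaults to tune for your own feedback volume.

```python
from statistics import mean, pstdev


def is_emerging_pattern(weekly_mentions: list[int], recent_weeks: int = 2,
                        z_threshold: float = 2.0) -> bool:
    """Return True only if the last `recent_weeks` clearly exceed the longer-term baseline.

    weekly_mentions: counts of one feedback theme per week, oldest first
    (for example 12-26 weeks, roughly the 3-6 month horizon in the questions).
    """
    if recent_weeks < 1 or len(weekly_mentions) <= recent_weeks:
        raise ValueError("need at least one baseline week and one recent week")
    baseline = weekly_mentions[:-recent_weeks]
    recent = weekly_mentions[-recent_weeks:]
    sigma = pstdev(baseline) or 1.0  # a perfectly flat baseline would otherwise divide by zero
    z = (mean(recent) - mean(baseline)) / sigma
    return z >= z_threshold


# Invented data covering roughly six months of weekly counts.
quiet_then_one_complaint = [0] * 22 + [0, 1]
sustained_jump = [2, 3] * 11 + [9, 10]
print(is_emerging_pattern(quiet_then_one_complaint))  # False
print(is_emerging_pattern(sustained_jump))            # True
```

A single complaint after months of silence does not clear the bar, while a sustained jump does. The check says nothing about how many users are affected, so it complements rather than replaces a look at the broader user base.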

5. Availability Heuristic: The Vividness Trap

The Bias: You overestimate the importance of things that come readily to mind because they're recent, unusual, or emotionally charged.

How It Sabotages Product Decisions:

You overemphasize dramatic but rare edge cases in product design

You give too much weight to memorable feedback while ignoring common but less vivid experiences

You focus on highly visible but less impactful product issues

Real-World Example: After a high-profile security incident at a competitor, our team spent months implementing elaborate security features that diverted resources from more pressing user needs. The vivid nature of the breach made the risk seem more immediate than data suggested.

Practical Countermeasures:

Quantify frequency and impact of issues rather than relying on anecdotes

Create a "problem bank" that systematically tracks issues with data on frequency and severity

Use structured prioritization frameworks such as RICE (Reach, Impact, Confidence, Effort)

Proactively research problems users aren't actively reporting
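RICE scores each candidate as Reach × Impact × Confidence ÷ Effort, so a vivid but narrow problem has to compete on the same scale as a dull but widespread one. This is a minimal sketch. The impact and confidence scales in the comments follow a commonly used convention, and the backlog items and numbers are invented for illustration; `Candidate` and `rank` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    reach: float       # people affected per period, e.g. users per quarter
    impact: float      # common scale: 3 massive, 2 high, 1 medium, 0.5 low, 0.25 minimal
    confidence: float  # 0.0 to 1.0, e.g. 0.8 when evidence is fairly solid
    effort: float      # person-months

    def rice(self) -> float:
        """RICE score = reach * impact * confidence / effort."""
        if self.effort <= 0:
            raise ValueError("effort must be positive")
        return self.reach * self.impact * self.confidence / self.effort


def rank(candidates: list[Candidate]) -> list[Candidate]:
    """Return candidates ordered by RICE score, highest first."""
    return sorted(candidates, key=lambda c: c.rice(), reverse=True)


# Invented backlog: a vivid item versus a widespread but unglamorous one.
backlog = [
    Candidate("elaborate security features", reach=500, impact=1, confidence=0.5, effort=6),
    Candidate("fix checkout friction", reach=20_000, impact=1, confidence=0.8, effort=2),
]
for c in rank(backlog):
    print(f"{c.name}: {c.rice():.0f}")
```

The score is only as good as its inputs, but writing the inputs down forces the "how many users, how sure are we" conversation that vividness tends to skip.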

Try This Today: When a vivid problem captures your attention, conduct a "Frequency Check":

How often does this actually occur?

What percentage of users are affected?

How does this compare to other issues in terms of frequency and impact?
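The Frequency Check boils down to counting occurrences and distinct affected users for each issue. This is a minimal sketch, assuming you can export `(user_id, issue_id)` pairs from support tickets or logs and know the number of active users for the same period. `frequency_check` and the issue names are hypothetical.

```python
from collections import Counter, defaultdict


def frequency_check(events, total_users: int) -> dict[str, dict[str, float]]:
    """Summarise each issue by raw occurrences and by share of distinct users affected.

    events: iterable of (user_id, issue_id) pairs, e.g. exported from tickets or error logs.
    total_users: active users in the same period, used as the denominator.
    """
    if total_users <= 0:
        raise ValueError("total_users must be positive")
    occurrences = Counter()
    users_by_issue = defaultdict(set)
    for user_id, issue in events:
        occurrences[issue] += 1
        users_by_issue[issue].add(user_id)
    summary = {
        issue: {"occurrences": occurrences[issue],
                "pct_users": 100 * len(users) / total_users}
        for issue, users in users_by_issue.items()
    }
    # Rank by share of users affected, not by how often a single user reports it.
    return dict(sorted(summary.items(), key=lambda kv: kv[1]["pct_users"], reverse=True))


# Invented data: one very vocal user versus a quieter, wider problem.
events = [("u1", "export_crash")] * 3 + [("u2", "slow_search"), ("u3", "slow_search"),
                                         ("u4", "slow_search")]
summary = frequency_check(events, total_users=4)
for issue, stats in summary.items():
    print(issue, stats)
```

In this invented data both issues were reported three times, but one user generated every report of the first, so ranking by share of users puts the quieter, wider problem first.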

6. Planning Fallacy: Systematic Underestimation

The Bias: You underestimate time, costs, and risks while overestimating benefits.

How It Sabotages Product Decisions:

You create unrealistic development timelines

You underestimate implementation complexity and technical debt

You overestimate feature impact on key metrics

You set unrealistic expectations with stakeholders

Real-World Example: We once estimated a major platform migration would take three months. Despite adding a 20% buffer, it took seven months. We failed to account for unexpected integration challenges, competing priorities, and learning curves with new technologies.

Practical Countermeasures:

Use reference class forecasting by looking at how long similar projects actually took

Use the "pre-mortem" technique to identify potential failures before they occur

Break work into smaller increments with more predictable timelines

Track estimate accuracy over time

Try This Today: For your next significant initiative, apply the "Historical Doubling Rule":

Look at similar past projects and note estimated vs. actual timelines

If no similar projects exist, double your initial estimate

Create ranges rather than point estimates (e.g., "4-6 weeks" not "5 weeks")

Identify specific risks that could extend the timeline
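The Historical Doubling Rule and reference class forecasting can be combined in a few lines: the overrun ratios from past projects (which also serve as the "track estimate accuracy over time" record) scale the new estimate into a range, and the doubling rule is the fallback when there is no history. This is a simplified sketch. Using the median and worst ratio as the range ends is an assumption, as is treating the undoubled estimate as the low end when no history exists; `forecast_range` is a hypothetical name.

```python
from statistics import median


def forecast_range(estimate: float, history: list[tuple[float, float]]) -> tuple[float, float]:
    """Reference-class range for a new estimate.

    history: (estimated, actual) durations of similar past projects, same unit as `estimate`.
    With history, scale the estimate by the median and the worst overrun ratio.
    Without history, fall back to the doubling rule: plan for up to 2x the estimate.
    """
    ratios = [actual / estimated for estimated, actual in history if estimated > 0]
    if not ratios:
        return float(estimate), 2.0 * estimate
    return estimate * median(ratios), estimate * max(ratios)


# Invented history in weeks: (estimated, actual) for three comparable projects.
past = [(2, 3), (4, 8), (5, 15)]
print(forecast_range(5, past))  # (10.0, 15.0)
print(forecast_range(5, []))    # (5.0, 10.0)
```

Reporting the pair as "10-15 weeks" rather than "5 weeks" is the range-instead-of-point habit from the list above, grounded in how similar work actually went.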

7. Status Quo Bias: Resistance to Change

The Bias: You prefer the current state of affairs and see changes as losses.

How It Sabotages Product Decisions:

You resist potentially valuable product pivots

You maintain legacy features that no longer serve users

You avoid bold innovations in favor of incremental improvements

You dismiss disruptive ideas that challenge existing mental models

Real-World Example: Despite data showing our product's navigation structure was causing user confusion, our team resisted a redesign for over a year. We overestimated the disruption a change would cause. When we finally implemented a more intuitive navigation, user satisfaction increased significantly. We regretted not making the change sooner.

Practical Countermeasures:

Regularly ask: "If we weren't already doing this, would we start?"

Implement sunset reviews for existing features

Create space for experimentation through innovation sprints

Use the "reversible decision" framework to reduce perceived risk

Try This Today: Use the "Clean Slate Exercise":

If you were building this product from scratch today, what would you do differently?

Which current features exist primarily because "that's how we've always done it"?

What would a new competitor without your legacy constraints do?

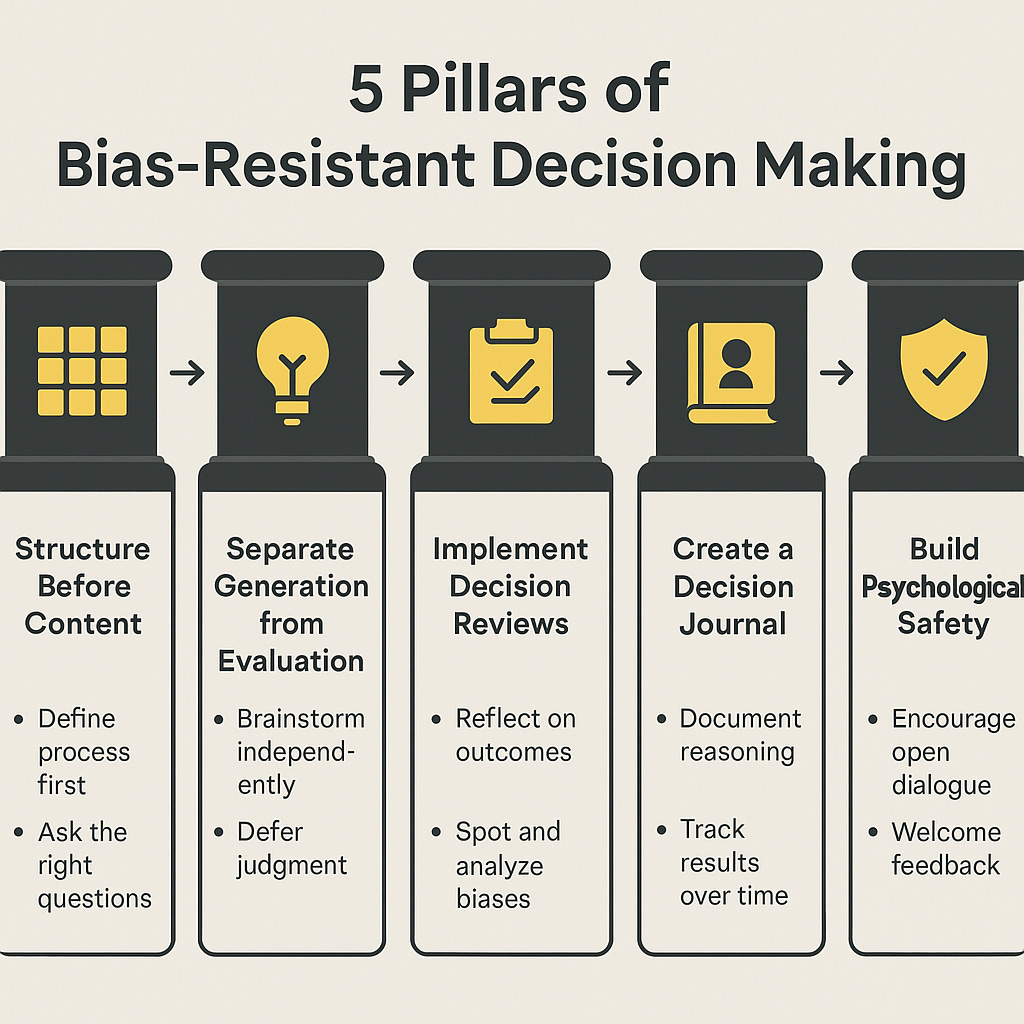

Building a Bias-Resistant Decision Process

While understanding individual biases helps, the most effective approach is building a decision-making process that systematically counters multiple biases:

Structure Before Content

Define how a decision will be made before discussing what the decision should be

Establish evaluation criteria in advance

Clarify decision-making authority

Separate Generation from Evaluation

Create distinct phases for generating options, gathering information, evaluating options, and deciding

This prevents premature convergence and reduces ownership bias

Implement Decision Reviews

Compare actual outcomes to expected outcomes

Identify which assumptions proved correct or incorrect

Document learnings to improve future decisions

Create a Decision Journal

Record significant product decisions, context, alternatives considered, and expected outcomes

This creates accountability and helps combat hindsight bias
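A decision journal needs little more than a structured record plus a review step that compares outcomes with what was expected. This is a minimal sketch: the `DecisionEntry` fields mirror the journal contents listed above, while the 10% tolerance, metric names, dates, and values are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class DecisionEntry:
    """One hypothetical journal entry, written at decision time and reviewed later."""
    decided_on: date
    decision: str
    context: str
    alternatives: list[str]
    expected: dict[str, float]                              # metric -> expected value, recorded up front
    actual: dict[str, float] = field(default_factory=dict)  # filled in at review time

    def review(self, tolerance: float = 0.1) -> dict[str, str]:
        """Compare actual with expected outcomes, metric by metric.

        A metric counts as "as expected" when it lands within `tolerance`
        (relative to the expected value); otherwise it "diverged".
        """
        verdicts = {}
        for metric, expected in self.expected.items():
            if metric not in self.actual:
                verdicts[metric] = "not yet measured"
            elif abs(self.actual[metric] - expected) <= tolerance * abs(expected):
                verdicts[metric] = "as expected"
            else:
                verdicts[metric] = "diverged"
        return verdicts


entry = DecisionEntry(
    decided_on=date(2024, 3, 1),
    decision="Ship simplified onboarding",
    context="Drop-off concentrated on step 3 of onboarding",
    alternatives=["Redesign all steps", "Do nothing"],
    expected={"activation_rate": 0.30, "support_tickets_per_week": 120},
)
entry.actual = {"activation_rate": 0.24}  # measured after launch
print(entry.review())
```

Because `expected` is captured before launch, the review compares against what the team actually predicted, not against a memory that hindsight has already edited.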

Build Psychological Safety

Ensure team members feel safe challenging ideas regardless of source

Celebrate changing your mind based on new evidence

Actively seek diverse perspectives

Cognitive biases aren't character flaws—they're part of being human. The difference between good and great product managers isn't the absence of biases but the awareness of them and the discipline to implement countermeasures.

Start by focusing on one or two biases you recognize in your own thinking, implement specific countermeasures, and gradually expand your toolkit. Your decisions will improve, your products will get better, and your teams will deliver consistently superior results.

Which cognitive bias do you struggle with most? Reply to share your experience—I read every response and often feature reader insights in future newsletters.
